In last month’s blog article I described how to import data from Visual Studio Online into time cockpit. In contrast to the usual pull approach, I showed how to import data into time cockpit with Webhooks using a push approach. In this article I want to talk about how to integrate on premise systems like Microsoft Dynamics Navision or CRM with time cockpit using Hybrid Connections.

Recently, we introduced time cockpit at a customer who runs an on-premise Navision system. On-premise applications like Navision or CRM often do not provide interfaces like REST APIs or Webhooks to access their data from outside the company network. Often, such on-premise ERPs are deeply rooted in our companies and contain vital data for running the business. Replacing them or migrating them to the cloud would mean an immense effort.

So if you ask yourself how your existing on-premise infrastructure might go together with the Software-as-a-Service (SaaS) story without migrating all your data to the cloud, this article might provide some useful information for you.

Data Ownership

As described in the last article of the series, time cockpit usually does not exist on its own, but uses master data (e.g. customers, projects, orders) from upstream systems which drive time cockpit. Maintaining data in the on-premise ERP and time cockpit would be redundant and would lead most likely to inconsistencies. As far as I see it, the only reasonable way to handle the issue is to define one leading system for the different categories of data, e.g. the on-premise ERP for master data and time cockpit for all time tracking and working time related data.

Data Integration - The Classic Way

As you may know, time cockpit is Software-as-a-Service (SaaS). It is hosted in Microsoft Azure, Microsoft's high-secure and high-available cloud computing platform. So the question is: How do you get data from the on-premise installation of your ERP-system into the time cockpit database in the cloud?

In the past we implemented small commandline helper tools that customers hosted on one of their on-premise servers. These helpers fetched data from the on-premise system, opened an out-bound connection to the time cockit database and loaded the data into the time cockpit database. The drawbacks are:

- On-Premise: The customer is forced to host the commandline tool on an on-premise application server. But isn't SaaS all about not having to maintain and run server infrastructure on premise? The customer has decided to use time cockpit because it is SaaS. Now he is forced to provide hardware again which needs to be maintained. Not a nice story...

- Development: Usually, we develop the small commandline helper tools that import data for our customers. However, after developing we need to test the program. Since customers – most understandably – do not treat giving VPN access to service providers like us lightly, it is difficult and time consuming to test an import.

- Maintenance: Since the commandline tool runs on-premise, it is quite hard for us to debug issues if they arise (and trust me, they will arise). That leads to an increased effort on the customer’s and the service provider’s side.

Using Hybrid Connections to Link the Cloud and Local Servers

Given the arguments above, we recently switched our strategy when it comes to importing data from on-premise systems. We still write small commandline helper tools that fetch data from an on-premise ERP and import it in time cockpit's database, but the tools of choice have changed.

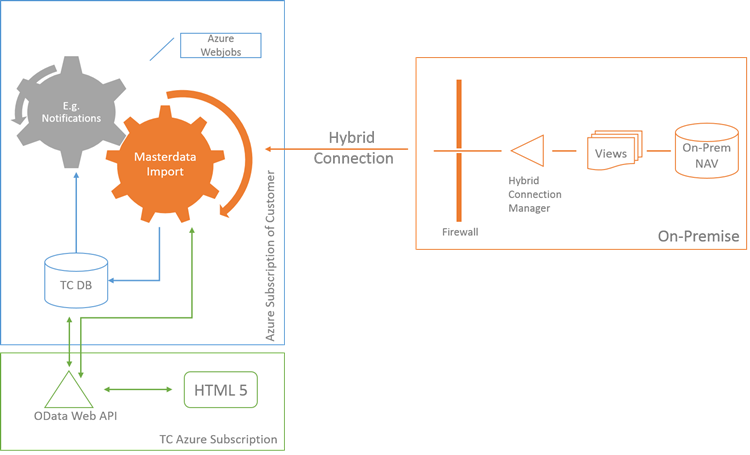

- Instead of deploying the import tools to a customer's server, we implement Azure WebJobs using the .NET platform. You can find more information on Azure Webjobs in the last blog article of that series.

- Instead of deploying our data access layer (technically .NET assemblies that become part of the commandline tools) to a customer's server, we use time cockpit's OData Web API. The OData Web API wraps our data access layer and provides a uniform, platform-independent way to access your data in time cockpit. The benefits of that approach are obvious. The customer does not have to care about the data access layer version that is currently deployed. If we make changes to the data access layer, the customer will most likely not be effected. The solution is much more robust.

- To access On-Premise data, we set up Hybrid Connections.

Hybrid Connections

As Microsoft puts it,

"...Hybrid Connections provide an easy and convenient way to connect Azure Websites and Azure Mobile Services to on-premises resources behind your firewall..." [Source: http://azure.microsoft.com/en-us/documentation/articles/integration-hybrid-connection-overview]

[Source: http://azure.microsoft.com/en-us/documentation/articles/integration-hybrid-connection-overview]

In terms of time cockpit, we usually create a Web App (a.k.a Web Sites) via the new Azure Portal. At the time of this writing, you can create a free web app that does not cost you anything. Of course, you do not get high performance, but chances are that you do not need it anyway. Usually, imports from an on-premise system into time cockpit are not time critical and run at night. It does not matter if an import runs 2 seconds or 5 minutes.

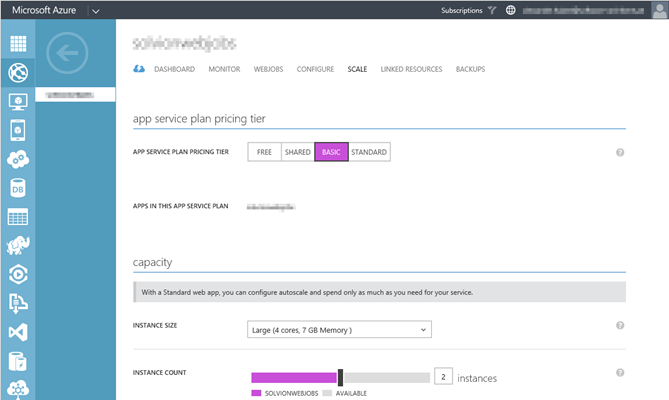

The nice thing is that if you need more performance you can easily scale-up or scale-out.

For one of our customers, we imported around 150K time sheet entries from the old time tracking system + 13K tasks. For importing the time sheet entries, we just scaled up the web job and the SQL database. After the import finished successfully, we scaled down the web app to a free version again.

Besides hosting the web job, the web app is also the container for the hybrid connection. For a detailed description of how to set up a hybrid connection, please refer to this excellent arcticle: http://blogs.msdn.com/b/biztalk_server_team_blog/archive/2014/05/13/hybrid-connections-preview.aspx.

Basically, the hybrid connection consists of two parts. First, you create the hybrid connection in your web app and tell it to which host it should connect. The host is your on-premise system. Second, you need to install a utility, the hybrid connection manager, on your on-premise server. With these two components you have set up your own small private network that connects your company network with Microsoft Azure.

You can access a database or other server resource as if they would be in the same network. The customer can keep his ERP system on-premise, our time cockpit import tasks run in the cloud and can access them.

Conclusion

A lot of our customers still run Microsoft Dynamics Navision or CRM on-premise. These systems provide existing master data like customers and projects to time cockpit. Those on-premise systems often cannot easily be replaced or migrated to cloud-hosted versions as this would mean a considerable investments for companies, especially if they have customized the systems to their needs. As far as I am concerned, hybrid connections are an elegant and easy to set up way to bring your on-premise systems in line with your SaaS strategy.

However, there are a few (security) considerations you should keep in mind:

- Restrict the access to your on-premise resources as far as possible. Do not open the door to your whole company network.

- If you use hybrid connections to access data in a database, let your customer create a dedicated DB user for you with limited user rights.

- If you access a database, let your customer create dedicated views that hold the information you need to import. This separates concerns and prevents the accidental alteration of data.

Interested?

If you feel that hybrid connections could help to streamline how you currently use time cockpit or your business, feel free to contact us at support@timecockpit.com.

comments powered by