Synchronization Performance

One of the main pain points our customers faced when using time cockpit was how long the initial setup takes. The main reason it took so long was a process called “blob syncing”. Blobs are the containers for the signal data collected by the signal tracker. Each blob is a single small container, usually only a few kilobytes in size, and is stored in the blob storage mechanism of the data layer. The advantage of using blobs is that they are encrypted with a user-specific password, which we refer to as the signal encryption password.

When we initially designed time cockpit, we did not consider the possibility that users would keep their signal data forever. Rather, we expected signals to be kept only until the timesheets for the period in question (a day, a week, or possibly a month) had been created. Over the past few years we have learned that most of our customers keep all signal data by default rather than removing it once it is no longer needed. In case you did not know, you can delete your existing signal data from within time cockpit using the "Delete Signals" wizard.

Anyhow, to support this customer behavior, we improved the synchronization speed. While I hope that you do not have to run an initial synchronization of time cockpit often, sometimes it is unavoidable, for example when setting up a new system.

BLOB Synchronization

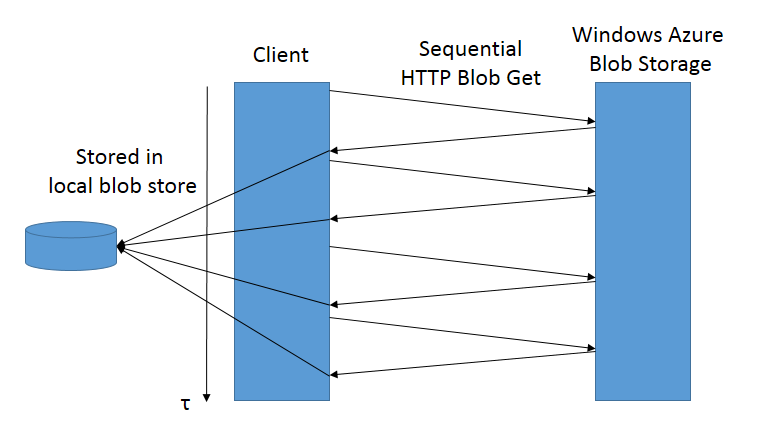

To understand why synchronizing those blobs took so long, consider that it used to happen sequentially: each blob was requested only after the previous one had arrived.

The time this process takes depends mostly on latency, not on the size of the chunks: it takes some time for an HTTP request to reach Windows Azure Blob Storage and return the blob. Multiply that latency by the number of signal chunks (25,000 after 3½ years of time cockpit for my user) and the reason for the slow sync process becomes clear.

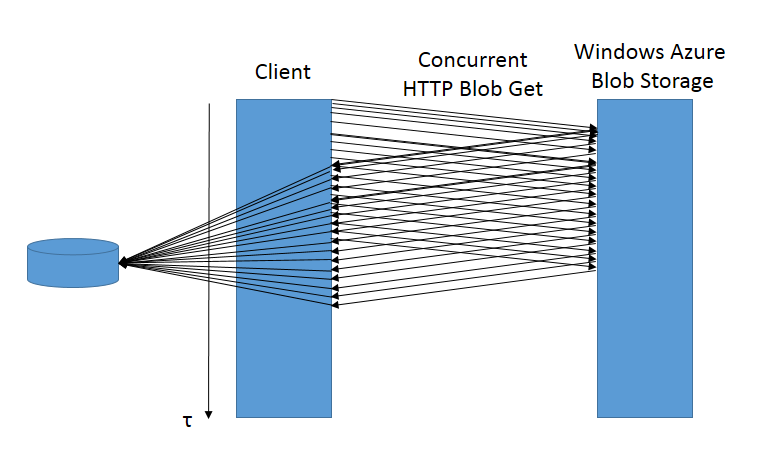
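The multiplication described above can be written out as a back-of-the-envelope estimate. This is only a sketch: the blob count is the one mentioned in the text, while the 0.15 s round-trip latency and the function name are assumptions chosen for illustration, not measured values.

```python
def sequential_sync_seconds(blob_count: int, round_trip_seconds: float) -> float:
    """Estimate a strictly sequential download: one full round trip per blob."""
    return blob_count * round_trip_seconds


# 25,000 chunks is the figure from the text; 0.15 s per request is an assumed latency.
estimate = sequential_sync_seconds(25_000, 0.15)
print(f"{estimate / 60:.1f} minutes")
```

Because the blobs are only a few kilobytes each, transfer time is ignored here; the estimate grows linearly with the number of blobs no matter how small they are.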

Fortunately, we fixed that situation. The obvious optimization is to request multiple blobs concurrently and thereby hide the latency associated with each request. And that is exactly what the improved sync does now:

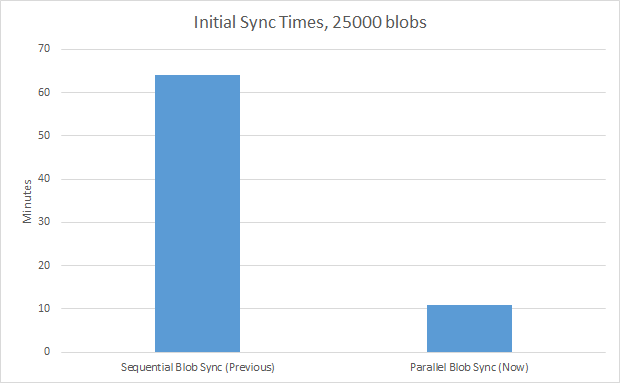
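The idea of keeping several requests in flight at once can be sketched as follows. This is not time cockpit's actual implementation: `fetch_blob` is a hypothetical stand-in that simulates a network round trip with a sleep, and the limit of 20 concurrent requests is an arbitrary assumption.

```python
import asyncio


async def fetch_blob(blob_id: int, latency: float) -> bytes:
    """Hypothetical stand-in for one HTTP GET against blob storage."""
    await asyncio.sleep(latency)  # simulates the network round trip
    return f"blob-{blob_id}".encode()


async def fetch_all(blob_ids, latency: float, max_in_flight: int) -> list:
    """Download all blobs with at most max_in_flight requests outstanding."""
    limiter = asyncio.Semaphore(max_in_flight)

    async def fetch_limited(blob_id: int) -> bytes:
        async with limiter:  # wait here while max_in_flight requests are pending
            return await fetch_blob(blob_id, latency)

    # gather returns the results in the same order as the input ids
    return await asyncio.gather(*(fetch_limited(b) for b in blob_ids))


blobs = asyncio.run(fetch_all(range(100), latency=0.01, max_in_flight=20))
print(len(blobs), "blobs downloaded")
```

With a limit of 20, the waiting time for 100 blobs is roughly that of 5 sequential round trips instead of 100, which is where the speedup comes from. The limit keeps the client from opening an unbounded number of connections.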

The end result is that initial syncs taking multiple hours are now a thing of the past. The time cockpit environment I use for production now syncs in 11 minutes instead of 65 minutes.

Other Synchronization Improvements

While working on the synchronization code, we decided to include several other improvements besides the BLOB synchronization. Our main focus was on the initial synchronization for new users or devices. In this case, we reduced the overall time required for synchronizing the data by skipping the handling of previously deleted elements and the comparison with local version information. These steps can safely be omitted when the local database is first created.

An important fix that is not related to performance is the proper synchronization of a large number of changes containing cyclic relations. Some of our customers have configured entities that contain relations to themselves. An example is the entity Task, which could contain a relation Parent that is used to model hierarchies of issues or work items. To allow such a scenario, the foreign key constraints of the cyclic relation had to be disabled or removed during the synchronization to avoid conflicts caused by data ordering issues. If a synchronization contained a large number of changes (more than the synchronization batch size), this process sometimes failed when the constraint was reintroduced. We resolved this issue by not reintroducing the constraint until all batches for the entity have been processed.
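The batching fix can be illustrated with a small sketch. It uses SQLite purely as a stand-in, since the database and mechanism time cockpit uses internally are not described here; the table layout, the `apply_batches` helper, and the sample batches are assumptions modeled on the Task/Parent example.

```python
import sqlite3


def apply_batches(conn, batches):
    """Insert Task rows batch by batch; enforce the self-reference only at the end."""
    conn.execute("PRAGMA foreign_keys = OFF")  # suspend enforcement for the whole entity
    for batch in batches:
        conn.executemany("INSERT INTO Task (Id, ParentId) VALUES (?, ?)", batch)
        # The constraint is NOT re-enabled here: a parent may arrive in a later batch.
    conn.execute("PRAGMA foreign_keys = ON")  # all batches processed, enforce again
    # foreign_key_check lists rows whose parent is still missing (empty means consistent)
    return conn.execute("PRAGMA foreign_key_check(Task)").fetchall()


# Autocommit mode, because SQLite ignores the foreign_keys pragma inside a transaction.
conn = sqlite3.connect(":memory:", isolation_level=None)
conn.execute(
    "CREATE TABLE Task (Id INTEGER PRIMARY KEY, ParentId INTEGER REFERENCES Task(Id))"
)

# Tasks 2 and 3 arrive in the first batch, their root task 1 only in the second.
batches = [[(2, 1), (3, 2)], [(1, None)]]
violations = apply_batches(conn, batches)
print("violations:", violations)
```

Had the constraint been checked again after the first batch, task 2 would have pointed to a parent that did not exist yet, which is the kind of failure described above.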

Example Results

To get a better understanding of our synchronization performance and the speedup in version 1.14, we selected several customer accounts for benchmarking. The examples represent different scenarios in which customers previously reported undesirable performance. All benchmarks were executed on a medium-size Azure VM (2 cores, 3.5 GB memory) located in a different datacenter (to create a realistic latency between client and server database), using the local disk for storing the database and blobs. Each customer benchmark consists of one initial synchronization for each time cockpit version under test.

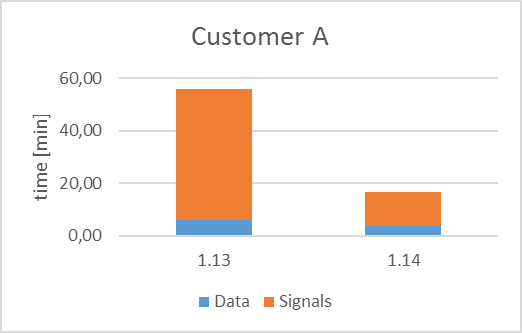

Customer A

The first example database contains timesheets for 5 people and 4 years. The account used for synchronization contained signal data for 3.5 years and a single device. This amounts to about 22,000 timesheets, 29,000 signal blobs, and a total of 57,000 operations for an initial synchronization.

The following chart shows the initial synchronization time in minutes for time cockpit versions June 2013 (1.13) and July 2013 (1.14). The time is split into Data (Timesheets, Projects ...) and Signals (BLOBs).

The total time improved from ~56 to ~17 minutes (-70%). Synchronization of general data changed from ~6 to ~4 minutes (-36%). The significant number of signal BLOBs showed the biggest improvement and changed from an unbearable ~50 to ~13 minutes (-74%).
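The percentages quoted here are relative changes in duration. A minimal sketch of that calculation, using the rounded minute values from the text as inputs (the function name is an assumption):

```python
def percent_change(before: float, after: float) -> float:
    """Relative change from before to after, in percent (negative means faster)."""
    return (after - before) / before * 100


total = percent_change(56, 17)    # total sync time in minutes, 1.13 vs. 1.14
signals = percent_change(50, 13)  # signal (BLOB) phase only
print(f"total {total:.0f}%, signals {signals:.0f}%")
```

Since the minute values are approximate, recomputing from them will not always match the published percentages exactly. The data phase (~6 to ~4 minutes) comes out at about -33% rather than -36%, presumably because the published figure was derived from unrounded measurements.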

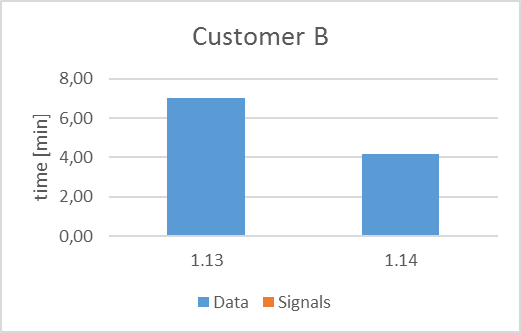

Customer B

The next scenario shows an account without existing signal data but with a significant number of timesheets (~42,000) and other updates, resulting in a total of ~47,000 operations.

The initial synchronization time changed from ~7 to ~4 minutes (-41%).

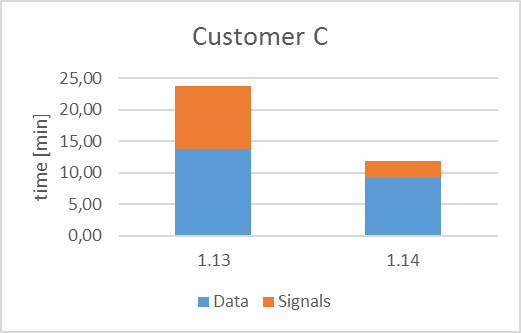

Customer C

The last example shows an account with a small to average number of signals (~5,000), a very large number of timesheets (~81,000), and other data (~42,000), resulting in a total of ~128,000 operations.

The total synchronization time changed from ~24 to ~12 minutes (-50%). Of that time, the general data phase improved from ~14 to ~9 minutes (-33%), while the signal synchronization changed from ~10 to ~3 minutes (-73%).

Overall Result

The overall/average improvement for all benchmarked data was a change of -62% in total synchronization time, -74% for signal synchronization time and -36% for general data synchronization.
